A seminar against the Tevatron! March 20, 2009

Posted by dorigo in news, physics, science. Tags: CDF, DZERO, Higgs boson, LHC, Tevatron

I spent this week at CERN to attend the meetings of the CMS week, an event which takes place four times a year, when collaborators of the CMS experiment, coming from all parts of the world, get together at CERN to discuss detector commissioning, analysis plans, and recent results. It was a very busy and eventful week, and only now, sitting on a train that brings me back from Geneva to Venice, can I find the time to report, with due care, on a few things you might be interested to know about.

One thing to report on is certainly the seminar I eagerly attended on Thursday morning, by Michael Dittmar (ETH-Zurich). Dittmar is a CMS collaborator, and he spoke at the CERN theory division on a ticklish subject: “Why I never believed in the Tevatron Higgs sensitivity claims for Run 2ab”. The title did promise a controversial discussion, but I was really startled by its level, as much as by the defamation of which I personally felt a target. I will explain this below.

I should also mention that by Thursday I had already attended a reduced version of his talk, which he had given the previous day in another venue. Back then, John Conway and I had both corrected him on a few plainly wrong statements, so I was puzzled to see him reiterate those false statements in the longer seminar! More on that below.

Dittmar’s obnoxious seminar

Dittmar started by saying he was infuriated by the recent BBC article where “a statement from the director of a famous laboratory” claimed that the Tevatron had 50% odds of finding a Higgs boson, in a certain mass range. This prompted him to prepare a seminar to express his scepticism. However, it turned out that his scepticism was not directed solely at the optimistic statement he had read, but at every single result on Higgs searches that CDF and DZERO had produced since Run I.

In order to discuss sensitivity and significance, the speaker made an unilluminating digression on how counting experiments can or cannot obtain observation-level significances with their data, depending on the level of background in their searches and on the associated systematic uncertainties. His statements on this issue were very basic and totally uncontroversial, but he failed to focus on the fact that nowadays nobody does counting experiments any more when searching for evidence of a specific model. Our confidence in advanced analysis methods involving neural networks, shape analysis, and likelihood discriminants, in the tuning of Monte Carlo simulations, and in the accurate analytical calculations of high-order diagrams for Standard Model processes has grown tremendously with years of practice and studies, and these methods and tools overcome the problems of searching for small signals immersed in large backgrounds. One can be sceptical, but one cannot ignore the facts, as the speaker seemed inclined to.
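To see why the counting-experiment argument is so basic, here is a minimal sketch of the usual rough approximation for the significance of a single-bin counting experiment, s/√(b + (δ·b)²), where δ is a relative systematic uncertainty on the background. The numbers are hypothetical, and this textbook formula is not what any Tevatron analysis actually uses.

```python
import math

def counting_significance(s: float, b: float, rel_syst: float = 0.0) -> float:
    """Approximate significance s / sqrt(b + (rel_syst * b)**2) of a counting experiment.

    s: expected signal events, b: expected background events,
    rel_syst: relative systematic uncertainty on b (0.10 means 10%).
    """
    sigma_b = rel_syst * b  # absolute systematic uncertainty on the background
    return s / math.sqrt(b + sigma_b ** 2)

# Hypothetical numbers: a signal that is always 5% of the background,
# with more and more data collected.
for b in (1e2, 1e3, 1e4, 1e5):
    s = 0.05 * b
    print(f"b={b:>8.0f}  stat only: {counting_significance(s, b):5.2f}  "
          f"with 10% syst: {counting_significance(s, b, 0.10):5.2f}")
```

With a 10% background systematic and S/B = 5%, the significance stalls near (S/B)/δ = 0.5 however much data is added. That is the kind of limitation that shape-based analyses, which exploit bins of much higher S/B, are designed to mitigate.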

Then Dittmar said that, in order to judge the value of sensitivity claims for the future, one may turn to past studies and verify their agreement with the actual results. So he turned to the Tevatron Higgs sensitivity studies of 2000 and 2003, two endeavours in which I had participated with enthusiasm.

He produced a plot showing the small Higgs signal that the Tevatron 2000 study believed the two experiments could achieve with 10 inverse femtobarns of data, expressing his doubts that the “tiny excess” could constitute evidence for Higgs production. Beside that graph, for comparison, he had placed a CDF result on real Run I data, where a WH or ZH signal in the four-jet final state had been searched for in the invariant mass distribution of the two b-jets.

He commented on that figure by saying, half-mockingly, that the data could have been used to exclude the Standard Model process of associated production, since the contribution from Z decays to b-quark pairs sat at a mass where one bin had fluctuated down by two standard deviations with respect to the sum of background processes. This ridiculous claim was utterly unsupported by the plot (which showed very good overall agreement between the data and the Monte Carlo predictions) and by the fact that the bins adjacent to the downward-fluctuating one were higher than the prediction. I found this claim really disturbing, because it tried to denigrate my experiment with a futile and incorrect argument. But his next statement was going to upset me even more.

In fact, he went on to discuss the global expectation of the Tevatron for Higgs searches, a graph (see below) produced in 2000 after a big effort by several tens of people in CDF and DZERO.

He started by saying that the graph was confusing, and that the documentation made clear neither how it had been produced nor that it was the combination of the CDF and DZERO sensitivities. This was very amusing, since from the far back of the room John Conway, a CDF colleague, shouted: “It says it in print on top of it: combined thresholds!”, then added, in a calmer voice, “…In case you’re wondering, I made that plot.” John had in fact been the leader of the Tevatron Higgs sensitivity study, not to mention the author of many of the most interesting searches for the Higgs boson in CDF since then.

Dittmar continued his surreal talk by upping the ante, claiming that the plot had been produced “by assuming a 30% improvement in the mass resolution of pairs of b-jets, when nobody had the least idea of how such an improvement could be achieved”.

I could not have put together a more personal, direct attack on years of my own work myself! It is no mystery that I have worked on dijet resonances since 1992, though of course I am a rather unknown soldier in this big game; nevertheless, I felt the need to interrupt the speaker at this point, exactly as I had done at the shorter talk the day before.

I remarked that in 1998, one year before the Tevatron sensitivity study, I had produced a PhD thesis and public documents showing the observation of a signal of $Z \to b\bar{b}$ decays in CDF Run I data, and had demonstrated on that very signal how the use of ingenious algorithms could improve the dijet mass resolution by at least 30%, making the signal more prominent. The relevant plots are below, directly from my PhD thesis: judge for yourself.

In the plots, you can see how the excess over background predictions moves to the right as more and more refined jet energy corrections are applied, starting from the result of generic jet energy corrections (top) to optimized corrections (bottom) until the signal becomes narrower and centered at the true value. The plots on the left show the data and the background prediction, those on the right show the difference, which is due to Z decays to b-quark jet pairs. Needless to say, the optimization is done on Monte Carlo Z events, and only then checked on the data.
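Why does a 30% better dijet mass resolution matter so much? A back-of-the-envelope sketch, assuming a Gaussian peak on a flat background and a mass window of ±1.5σ (both assumptions, with hypothetical event counts): the fraction of signal inside the window stays fixed, while the background grows with the window width, so s/√b scales like 1/√σ.

```python
import math

def window_significance(n_signal: float, bkg_density: float, sigma: float, k: float = 1.5) -> float:
    """s/sqrt(b) for a Gaussian peak of width sigma (GeV) on a flat background.

    The mass window is [peak - k*sigma, peak + k*sigma]; bkg_density is the
    number of background events per GeV, assumed flat across the window.
    """
    s = n_signal * math.erf(k / math.sqrt(2))  # signal fraction inside +-k sigma
    b = bkg_density * 2 * k * sigma            # background scales with window width
    return s / math.sqrt(b)

# Hypothetical inputs: 500 signal events, 200 background events per GeV.
base = window_significance(500, 200, sigma=15.0)
better = window_significance(500, 200, sigma=0.7 * 15.0)  # 30% narrower peak
print(f"{base:.2f} -> {better:.2f}  (gain x{better / base:.3f})")
```

A peak 30% narrower thus gives roughly a 20% gain in significance, which is worth about 43% more integrated luminosity, since significance grows as the square root of the amount of data.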

So I said that Dittmar’s statement was utterly false: we had an idea of how to do it, we had proven we could do it, and besides, the plots showing what we had done had indeed been included in the Tevatron 2000 report. Had he overlooked them?

Escalation!

Dittmar seemed unbothered by my remark, and responded that that small signal had not been confirmed in Run II data. His statement constituted an even more direct attack on four more years of my research, spent on that very topic. I kept my cool, because when your opponent offers you on a silver plate the chance to verbally sodomize him, you cannot be too angry with him.

I remarked that a signal had indeed been found in Run II, amounting to about 6000 events after all selection cuts, and that it confirmed the past results. Dittmar then said that “to the best of his knowledge” this had not been published, so it did not really count. I then explained that it was a 2008 NIM publication, and asked whether he would please do his homework before making such unsubstantiated allegations. He shrugged his shoulders, said he would look more carefully for the paper, and went back to his talk.

His points about the Tevatron sensitivity studies were laid down: for a low-mass Higgs boson, the signal is just too small and the backgrounds too large, and the sensitivity of real searches falls short of expectations by a large factor. To stress this point, he produced a slide containing a plot he had taken from this blog! The plot (see on the left), which is my own concoction and not Tevatron-approved material, shows the ratio of the observed limit on Higgs production to the expectations of the 2000 study. He pointed at the two points for 100-140 GeV Higgs boson masses, trying to prove his claim: the Tevatron is now doing three times worse than expected. He even uttered: “It is time to confess: the sensitivity study was wrong by a large factor!”

I could not help interrupting again: I had to stress that the plot was not approved material and was just a private interpretation of Tevatron results, but I did not deny its contents. The plot was indeed showing that low-mass searches were below par, but it was also showing that high-mass ones were amazingly in agreement with expectations worked out ten years earlier. Then John Conway explained the low-mass discrepancy for the benefit of the audience, as he had done the day before, with no apparent benefit to the speaker.

Conway explained that the study had been done under the hypothesis that an upgrade of our silicon detector would be financed by the DOE: it was in fact meant to prove the usefulness of funding the upgrade. A larger acceptance of the inner silicon tracker boosts the efficiency for identifying the b-quark jets from Higgs decays by a large factor, because two b-jets must be identified, so any gain in per-jet acceptance gets squared when computing the overall efficiency. So Dittmar could not really blame the Tevatron experiments for predicting something that would not materialize in a corresponding result, given that the DOE had denied the funding to build the upgraded detector!
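The squaring argument is easy to check with a toy, assuming the two b-tags are independent and using hypothetical per-jet efficiencies (not CDF numbers):

```python
def double_tag_efficiency(eff_per_jet: float) -> float:
    """Probability that both b-jets are tagged, assuming independent tags."""
    return eff_per_jet ** 2

# Hypothetical per-jet efficiencies before and after a 20% relative acceptance gain.
before, after = 0.50, 0.60
gain_single = after / before
gain_double = double_tag_efficiency(after) / double_tag_efficiency(before)
print(f"single-tag gain x{gain_single:.2f}, double-tag gain x{gain_double:.2f}")
# prints: single-tag gain x1.20, double-tag gain x1.44
```

A 20% improvement per jet becomes a 44% improvement in the number of double-tagged signal events.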

I then felt compelled to add that, by using my plot, Dittmar was proving the opposite of the thesis he wanted to demonstrate: low-mass Tevatron searches were shown to underperform because of funding issues, rather than because of a wrong estimate of sensitivity; and high-mass searches, almost unhindered by the lack of an upgraded silicon detector, were in excellent agreement with expectations!

The speaker replied that no, the high-mass searches were not in agreement, because their results could not be believed, and moved on to discuss them using real-data results from the Tevatron.

He said that the $H \to WW$ decay is a great channel at the LHC.

“Possible at the Tevatron? I believe that the WW continuum background is much larger at a ppbar collider than at a pp collider, so my personal conclusion is that if the Tevatron people want to waste their time on it, good luck to them.”

Now, come on. I cannot imagine how a respectable particle physicist could bring himself to make such statements in front of a distinguished audience (which, have I mentioned it, included several theorists of the highest caliber, including no less than Edward Witten). Waste their time? I felt I was wasting my time listening to him, but my determination to report on his talk here kept me anchored to my chair, taking notes.

So this second part of the talk was no less unpleasant than the first: Dittmar criticized the Tevatron high-mass Higgs results in the most incorrect, and scientifically dishonest, way I could think of. Here is just a summary:

- He picked out a distribution of one particular sub-channel from one experiment, noting that its most signal-rich region seemed to show a deficit of events. He then showed the global CDF+DZERO limit, which did not show a departure between the expected and observed limits on the Higgs cross section, and concluded that there was something fishy in the way the limit had been evaluated. But the limit is extracted from literally several dozen such distributions, something he failed to mention despite having been warned about that very issue in advance.

- He picked out two neural-network output distributions for Higgs searches at 160 and 165 GeV, and declared they could not be correct since they were very different in shape! John, from the back, replied: “You have never worked with neural networks, have you?” No, he had not. Had he, he would probably have understood that different mass points, optimized differently, can produce very different NN outputs.

- He showed another neural-network output based on 3/fb of data, which had a pair of data points lying one standard deviation above the background predictions, and the corresponding plot for a search performed with more statistics, which instead had an underfluctuation. He said he was puzzled by the effect. Again, some intervention from the audience was necessary to explain that the methods are constantly reoptimized, so it is no wonder that adding more data can produce a different outcome. This triggered a discussion when somebody in the audience speculated that searches might be performed by looking at the data before choosing which method to use for the limit extraction! On the contrary, of course, all Tevatron Higgs searches are blind analyses, where the optimization is performed on expected limits, using control samples and Monte Carlo, and the data are looked at only afterwards.

- He showed that the Tevatron 2000 report had estimated a maximum signal-to-noise ratio of 0.34 for the $H \to WW$ search, and he picked one random plot from the many searches of that channel by CDF and DZERO, showing that the signal-to-noise ratio there was never larger than 0.15 or so. It was useless to explain to him that the S/N of searches based on neural networks and combined discriminants is not a fixed value, and that data-analysis techniques have improved a great deal in ten years.
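The point about limits being extracted from dozens of distributions, each with bin-dependent S/N, can be illustrated with a minimal binned-likelihood sketch. For independent Poisson bins, each bin contributes n·ln(1 + s/b) - s to the log-likelihood ratio between the signal-plus-background and background-only hypotheses, so a deficit in one sub-channel is only one term in a long sum. The channels and counts below are hypothetical, and this is the generic technique, not the Tevatron combination code.

```python
import math

def log_likelihood_ratio(bins):
    """Sum over independent Poisson bins of ln[P(n | s+b) / P(n | b)].

    bins: iterable of (s, b, n) = expected signal, expected background,
    observed count. Each bin contributes n*ln(1 + s/b) - s, so high-S/N bins
    weigh more, but every bin counts. Positive values favour s+b.
    """
    return sum(n * math.log(1.0 + s / b) - s for s, b, n in bins)

# Hypothetical toy: three channels, each a small NN-output histogram of (s, b, n).
channels = {
    "channel A": [(0.5, 40.0, 41), (1.0, 12.0, 10), (2.0, 4.0, 3)],
    "channel B": [(0.3, 60.0, 62), (0.8, 20.0, 22), (1.5, 6.0, 7)],
    "channel C": [(0.2, 30.0, 29), (0.6, 9.0, 10), (1.2, 3.0, 4)],
}
for name, bins in channels.items():
    best_sn = max(s / b for s, b, _ in bins)  # S/N differs from bin to bin
    print(f"{name}: max S/N = {best_sn:.2f}, LLR = {log_likelihood_ratio(bins):+.2f}")

all_bins = [x for bins in channels.values() for x in bins]
print(f"combined LLR = {log_likelihood_ratio(all_bins):+.2f}")
```

Because the bins are independent, the combined log-likelihood ratio is simply the sum of the per-channel ones, and the maximum S/N of any single histogram says little about the sensitivity of the whole.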

Dittmar concluded his talk by saying that:

“Optimistic expectations might help to get funding! This is true, but it is also true that this approach eventually destroys some remaining confidence in science of the public.”

His last slide even contained the sentence he had previously brought himself to utter:

“It is the time to confess and admit that the sensitivity predictions were wrong”.

Finally, he encouraged the LHC experiments to look for the Higgs where the Tevatron had excluded it (between 160 and 170 GeV), because Tevatron results cannot be believed. I was disgusted: he definitely stakes a strong claim to the prize for the most obnoxious talk of the year. Unfortunately for all, it was also an incorrect, scientifically dishonest, and dilettantish lamentation, plus a defamation of a community of 1300 respected physicists.

In the end, I really wonder what moved Dittmar to such a disastrous performance. I think I know the answer, at least in part: he has been an advocate of the $H \to WW$ signature since 1998, and he must now feel bad about that beautiful process being proven hard to see, by his “enemies”. Add to that the frustration of seeing the Tevatron produce brilliant results and excellent performances while CMS and ATLAS sit idle in their caverns, and you might figure out there is some human factor to take into account. But nothing, in my opinion, can justify the mix he put together: false allegations, disregard of published material, manipulation of plots, public defamation of respected colleagues. I am sorry to say it, but even though I have nothing personal against Michael Dittmar (I do not know him, and in private he might even be a pleasant person), it will be very difficult for me to collaborate with him for the benefit of the CMS experiment in the future.

Higgs decays to photon pairs! March 4, 2009

Posted by dorigo in news, physics, science. Tags: DZERO, Higgs boson, LHC, photons, standard model, Tevatron

It was with great pleasure that I found yesterday, on the public page of the DZERO analyses, a report on their new search for Higgs boson decays to photon pairs. On that rather rare decay process (along with another non-trivial reaction), the LHC experiments base their hopes of seeing the Higgs boson if that particle has a mass close to the LEP II lower limit, i.e. not far from 115 GeV. And this is the first high-statistics search for the SM Higgs in that final state to obtain results that are competitive with the more standard searches!

My delight increased when I saw that the results of the DZERO search are based on a data sample corresponding to a whopping 4.2 inverse femtobarns of integrated luminosity. This is the largest set of hadron-collider data ever used for an analysis. 4.2 inverse femtobarns correspond to about three hundred trillion collisions, sorted out by DZERO. Of course, both DZERO and CDF have by now collected more data than that: almost five inverse femtobarns. However, it always takes some time before the calibration, reconstruction, and production of the newest datasets are performed… DZERO is catching up nicely with the accumulated statistics, it appears.
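The “three hundred trillion” figure follows from multiplying the integrated luminosity by the total inelastic proton-antiproton cross section. A quick check, assuming a round 60 mb for that cross section at 1.96 TeV (an approximate value, not taken from the text):

```python
# Unit conversion: 1 mb = 1e-3 b and 1 fb = 1e-15 b, so 1 mb = 1e12 fb.
MB_IN_FB = 1e12

def expected_events(cross_section_fb: float, int_lumi_inv_fb: float) -> float:
    """N = sigma * integrated luminosity, with sigma in fb and L in fb^-1."""
    return cross_section_fb * int_lumi_inv_fb

sigma_inel_mb = 60.0  # assumed round value of the inelastic ppbar cross section
n_collisions = expected_events(sigma_inel_mb * MB_IN_FB, 4.2)
print(f"{n_collisions:.1e} collisions")
```

The result, about 2.5×10^14, is in the same ballpark as the figure quoted above.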

The most interesting few tens of billions or so of those events have been fully reconstructed by the software algorithms, identifying charged tracks, jets, electrons, muons, and photons. Yes, photons: quanta of light, only very energetic ones: gamma rays.

When photons have an energy exceeding a GeV or so (i.e. one corresponding to a proton mass or above), they can be counted and measured individually by the electromagnetic calorimeter. One must look for very localized energy deposits which cannot be spatially correlated with a charged track: something hits the calorimeter after crossing the inner tracker, but no signal is found there, implying that the object was electrically neutral. The shape of the energy deposition then confirms that one is dealing with a single photon, and not -for instance- a neutron, or a pair of photons traveling close to each other. Let me expand on this for a moment.

Background sources of photon signals

In general, every proton-antiproton collision yields dozens, or even hundreds, of energetic photons. This is not surprising, as there are several significant sources of GeV-energy gamma rays to consider.

- Electrons, and in principle any other electrically charged particle emitted in the collision, can produce photons by the process called bremsstrahlung: passing close to the electric field generated by a heavy nucleus, the particle emits electromagnetic radiation, thus losing part of its energy. Note that this process cannot happen in vacuum, since there are no target nuclei there to supply the electric field with which the charged particle interacts (in principle one can have bremsstrahlung also in the presence of neutral particles, since what matters is the capability of the target to absorb part of the colliding body’s momentum; but in that case one needs a more complicated scattering process, so let us forget about it). For particles heavier than the electron, the process is suppressed up to the very highest energies (where particle masses are irrelevant with respect to their momenta), and it is only worth mentioning for muons and pions in heavy materials.

- By far the most important process for photon creation at a collider is the decay of neutral hadrons. A high-energy collision at the Tevatron easily yields a dozen neutral pions, and these particles decay almost 99% of the time into pairs of photons, $\pi^0 \to \gamma\gamma$. Of course, these photons would only have an energy equal to half the neutral pion mass (about 0.07 GeV) if the neutral pions were at rest; it is only through the large momentum of the parent that the photons may be energetic enough to be detected in the calorimeter.

- A similar fate to that of neutral pions awaits other neutral hadrons heavier than the $\pi^0$: most notably the particle called eta, in the decay $\eta \to \gamma\gamma$. The eta has a mass four times larger than that of the neutral pion, and it is less frequently produced.

- And other hadrons may produce photons in de-excitation processes, albeit not in pairs: excited hadrons often decay radiatively into their lower-mass brothers, and the radiated photon may carry a significant energy, again critically depending on the parent’s speed in the laboratory.
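The kinematics behind the photon-pair background can be sketched for a neutral pion (or, passing a different mass, an eta at about 0.548 GeV) decaying to two photons. In the lab, the photon energies spread between E(1-β)/2 and E(1+β)/2, and the two photons can be no closer than an opening angle of 2·arcsin(m/E). This is standard two-body decay kinematics; the pion energies used below are hypothetical.

```python
import math

PI0_MASS = 0.135  # GeV, neutral pion mass (rounded)

def photon_energy_range(e_parent: float, mass: float = PI0_MASS):
    """Min and max lab energy of a photon from a two-photon decay of a moving meson.

    E_gamma = (E/2) * (1 + beta * cos(theta*)), where theta* is the decay angle
    in the rest frame, so E_gamma spans [E(1-beta)/2, E(1+beta)/2].
    """
    beta = math.sqrt(1.0 - (mass / e_parent) ** 2)
    return 0.5 * e_parent * (1 - beta), 0.5 * e_parent * (1 + beta)

def min_opening_angle(e_parent: float, mass: float = PI0_MASS) -> float:
    """Smallest angle between the two photons, in radians: 2*asin(m/E)."""
    return 2.0 * math.asin(mass / e_parent)

for e in (1.0, 5.0, 20.0):
    lo, hi = photon_energy_range(e)
    print(f"E(pi0) = {e:5.1f} GeV: photons {lo:.4f}-{hi:.2f} GeV, "
          f"min opening angle {1000 * min_opening_angle(e):.1f} mrad")
```

For a 20 GeV pion the minimum opening angle is only about 13.5 mrad, which is why two close photons from a fast neutral pion can mimic a single photon, and why the shape of the calorimeter deposit matters.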

All in all, that’s quite a handful of photons our detectors are showered with, event after event! How the hell can DZERO then sort out, amidst over three hundred trillion collisions, the five or ten or so which saw the decay of a Higgs to two photons?

And the Higgs signal amounts to…

Five to ten events. Yes, we are talking about a tiny signal here. To eyeball how many standard model Higgs boson decays to photon pairs we may expect in a sample of 4.2 inverse femtobarns, we make some approximations. First of all, we take a 115 GeV Higgs as a reference: that is the Higgs mass where the analysis should be most sensitive, if we accept that the Higgs cannot be much lighter than that; for heavier Higgs bosons the number of signal events decreases, because the heavier a particle is, the less frequently it is produced.

The cross-section for the direct-production process $p\bar{p} \to H + X$ (where X denotes our unwillingness to specify whatever else may be produced together with the Higgs) is, at the Tevatron collision energy of 1.96 TeV, of the order of one picobarn. I am purposely avoiding fetching a plot of the cross section versus mass to give you the exact number: it is in that ballpark, and that is enough.

The other input we need is the branching ratio of the Higgs decay to two photons. This is the fraction of disintegrations yielding the final state that DZERO has been looking for. It depends on the detailed properties of the Higgs particle, which couples to other particles more strongly the heavier they are. The larger a particle’s mass, the stronger its coupling to the Higgs, and the more frequent the decay of H into a pair of those particles: the branching fraction depends on the squared mass of the particle; but since the sum of all branching ratios is one (if we say the Higgs decays, then there is a 100% chance of its decaying into something, no less and no more!), any branching fraction depends on ALL other particle masses!!!

“Wait a minute,” I would like to hear you say now, “the photon is massless! How can the Higgs couple to it?!”. Right. H does not couple directly to photons, but it can nevertheless decay into them via a virtual loop of electrically charged particles. Just like when your US plug won’t fit into a European AC outlet! You do not despair, and insert an adaptor: something endowed with the right holes on one side and pins on the other. Much in the same way, a virtual loop of top quarks, for instance, will do a good job: the top has a large mass, so it couples aplenty to the Higgs, and it has an electric charge, so it is capable of emitting photons. The three dominant Feynman diagrams for the $H \to \gamma\gamma$ decay are shown above: the first two of them involve a loop of W bosons, the third a loop of top quarks.

So, how much is the branching ratio to two photons in the end? It is a complicated calculation, but the result is roughly one thousandth. One in a thousand low-mass Higgses will disintegrate into energetic light: two angry gamma rays, each carrying roughly the energy of a 2-milligram mosquito launched at the whopping speed of four inches per second toward your buttocks.
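The mosquito comparison survives a quick check, assuming the 115 GeV Higgs decays at rest so that each photon carries half its mass, 57.5 GeV:

```python
GEV_IN_JOULE = 1.602176634e-10  # 1 GeV = 1e9 eV, and 1 eV = 1.602176634e-19 J

mass_kg = 2e-6            # a 2-milligram mosquito
speed_m_s = 4 * 0.0254    # four inches per second, in m/s
kinetic_j = 0.5 * mass_kg * speed_m_s ** 2

photon_gev = 115 / 2      # assumption: Higgs at rest, photons share its mass equally
photon_j = photon_gev * GEV_IN_JOULE
print(f"mosquito: {kinetic_j:.2e} J, photon: {photon_j:.2e} J")
```

Both come out at roughly 10^-8 joules.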

Now we have all the ingredients for our computation of the number of signal events we may be looking at, amidst the trillions produced. The master formula is just

$N = \sigma_H \times L \times B$,

where $N$ is the number of decays of the kind we want, $\sigma_H$ is the production cross section for the Higgs at the Tevatron, $L$ is the integrated luminosity on which we base our search, and $B$ is the branching ratio of the decay we study.

With $\sigma_H \approx 1\ \mathrm{pb} = 1000\ \mathrm{fb}$, $L = 4.2\ \mathrm{fb}^{-1}$, and $B \approx 10^{-3}$, the result is, guess what, 4.2 events. 4.2 in three hundred trillion. Finding a needle in a haystack is child’s play in comparison!
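The same estimate in code, with the units made explicit (1 pb = 1000 fb). The inputs are the rough values used above, and no detector acceptance or selection efficiency is included:

```python
PB_IN_FB = 1000.0  # 1 pb = 1000 fb

def expected_signal(sigma_pb: float, int_lumi_inv_fb: float, branching_ratio: float) -> float:
    """N = sigma * L * B, with sigma in pb and integrated luminosity in fb^-1."""
    return sigma_pb * PB_IN_FB * int_lumi_inv_fb * branching_ratio

n = expected_signal(sigma_pb=1.0, int_lumi_inv_fb=4.2, branching_ratio=1e-3)
print(f"{n:.1f} expected H -> gamma gamma decays")
# prints: 4.2 expected H -> gamma gamma decays
```

Any trigger, reconstruction, or selection efficiency would multiply this number by a factor smaller than one.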

The DZERO analysis

I will not spend much of my time or yours discussing the details of the DZERO analysis here, primarily because this post is already rather long, but also because the analysis is pretty straightforward to describe at an elementary level: one selects events with two photons of suitable energy, computes their combined invariant mass, and compares the expectation for Higgs decays (a roughly bell-shaped curve centered at the Higgs mass, with a width of ten GeV or so) with the expected backgrounds from all the processes capable of yielding pairs of energetic photons, plus all those yielding fake photons. [Yes, fake photons: of course the identification of gamma rays is not perfect; one may, for instance, have failed to detect a charged track pointing at the calorimeter energy deposit.] Then, a fit of the mass distribution extracts an upper limit on the number of signal events that may be hiding there. From the upper limit on the signal size, an upper limit is obtained on the signal cross-section.

OK, the above was a bit too quick. Let me be slightly more detailed. The data sample is collected by an online trigger requiring two isolated electromagnetic deposits in the calorimeter. Offline, the selection requires that both photon candidates have a transverse energy exceeding 25 GeV, and that they be isolated from other calorimetric activity, a requirement which removes fake photons due to hadronic jets.

Further, there must be no charged tracks pointing close to the deposit, and a neural-network classifier is used to discriminate real photons from backgrounds using the shape of the energy deposition and other photon-quality variables. The NN output is shown in the figure below: real photons (described by the red histogram) cluster on the right. A cut on the data (black points) requiring an NN output larger than 0.1 accepts almost all of the signal and removes 50% of the backgrounds (the hatched blue histogram). One important detail: the shape of the NN output for real high-energy photons is modeled by Monte Carlo simulations, but it is found to be in good agreement with that of real photons in radiative Z boson decays, $Z \to \ell^+\ell^-\gamma$. In those processes, the detected photon sample is essentially 100% pure!

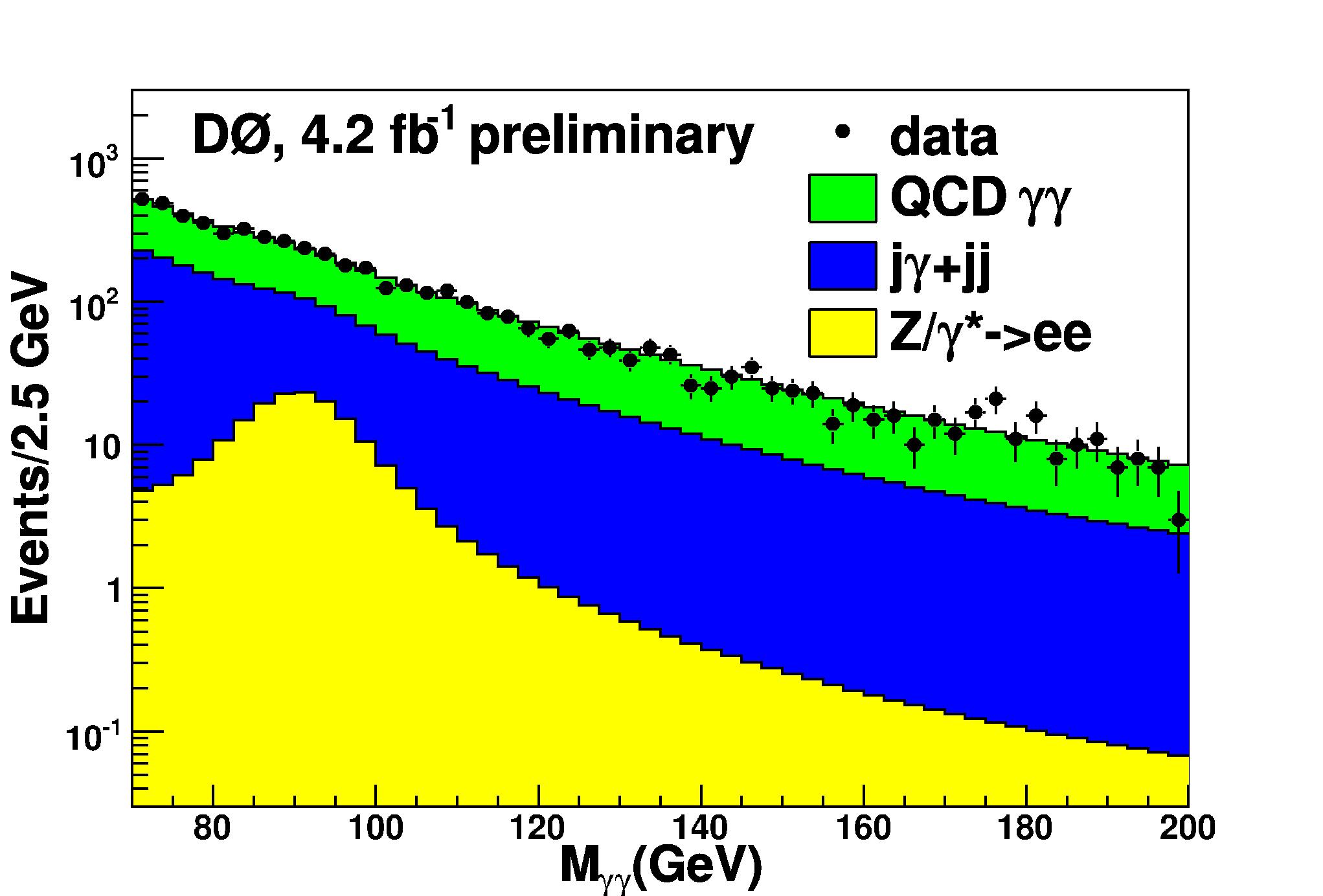
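The selection described above, followed by the diphoton mass computation, might look like this minimal sketch. The `PhotonCandidate` record and its field names are hypothetical, the cuts are the ones quoted in the text, and choosing the two highest-ET photons is an assumption; the real DZERO selection has more ingredients. For massless objects the invariant mass is m² = 2·ET1·ET2·(cosh Δη - cos Δφ).

```python
import math
from dataclasses import dataclass

@dataclass
class PhotonCandidate:
    """Hypothetical reconstructed EM object; field names are illustrative only."""
    et: float          # transverse energy, GeV
    eta: float         # pseudorapidity
    phi: float         # azimuthal angle, radians
    isolated: bool     # passes calorimeter isolation
    has_track: bool    # a charged track points at the deposit
    nn_output: float   # photon-ID neural-network score

def passes_selection(p: PhotonCandidate) -> bool:
    # Cuts quoted in the text: ET > 25 GeV, isolation, no track, NN output > 0.1.
    return p.et > 25.0 and p.isolated and not p.has_track and p.nn_output > 0.1

def diphoton_mass(p1: PhotonCandidate, p2: PhotonCandidate) -> float:
    """Invariant mass of two massless objects: m^2 = 2 ET1 ET2 (cosh(d_eta) - cos(d_phi))."""
    m2 = 2.0 * p1.et * p2.et * (math.cosh(p1.eta - p2.eta) - math.cos(p1.phi - p2.phi))
    return math.sqrt(max(m2, 0.0))

def select_event(photons):
    """Return the mass of the two highest-ET selected photons, or None if fewer than two pass."""
    good = sorted((p for p in photons if passes_selection(p)), key=lambda p: p.et, reverse=True)
    if len(good) < 2:
        return None
    return diphoton_mass(good[0], good[1])

# Two back-to-back central photons of 57.5 GeV each reconstruct to 115 GeV.
a = PhotonCandidate(57.5, 0.0, 0.0, True, False, 0.9)
b = PhotonCandidate(57.5, 0.0, math.pi, True, False, 0.9)
print(select_event([a, b]))
```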

After the selection, the surviving backgrounds are due to three main processes: real photon pairs produced by quark-antiquark interactions, Compton-like gamma-jet events where the jet is mistaken for a photon, and Drell-Yan processes yielding two electrons, both of which are mistaken for photons. You can see the relative importance of the three sources in the graph below, which shows the diphoton invariant mass distribution for the data (black dots) compared to the sum of backgrounds. Real photons are in green, Compton-like gamma-jet events are in blue, and the Drell-Yan contribution is in yellow.

The mass distribution has a very smooth, exponentially falling shape, and to search for Higgs events DZERO fits the spectrum with an exponential, excluding a signal window where Higgs decays may contribute. The fit is then extrapolated into the signal window, and a comparison with the data found there provides the means for a measurement; different signal windows are used to search for different Higgs masses. Below are shown four different hypotheses for the Higgs mass, ranging from 120 to 150 GeV in 10-GeV steps. The expected signal distribution, shown in purple, is multiplied by a factor of 50 in the plots, for display purposes.

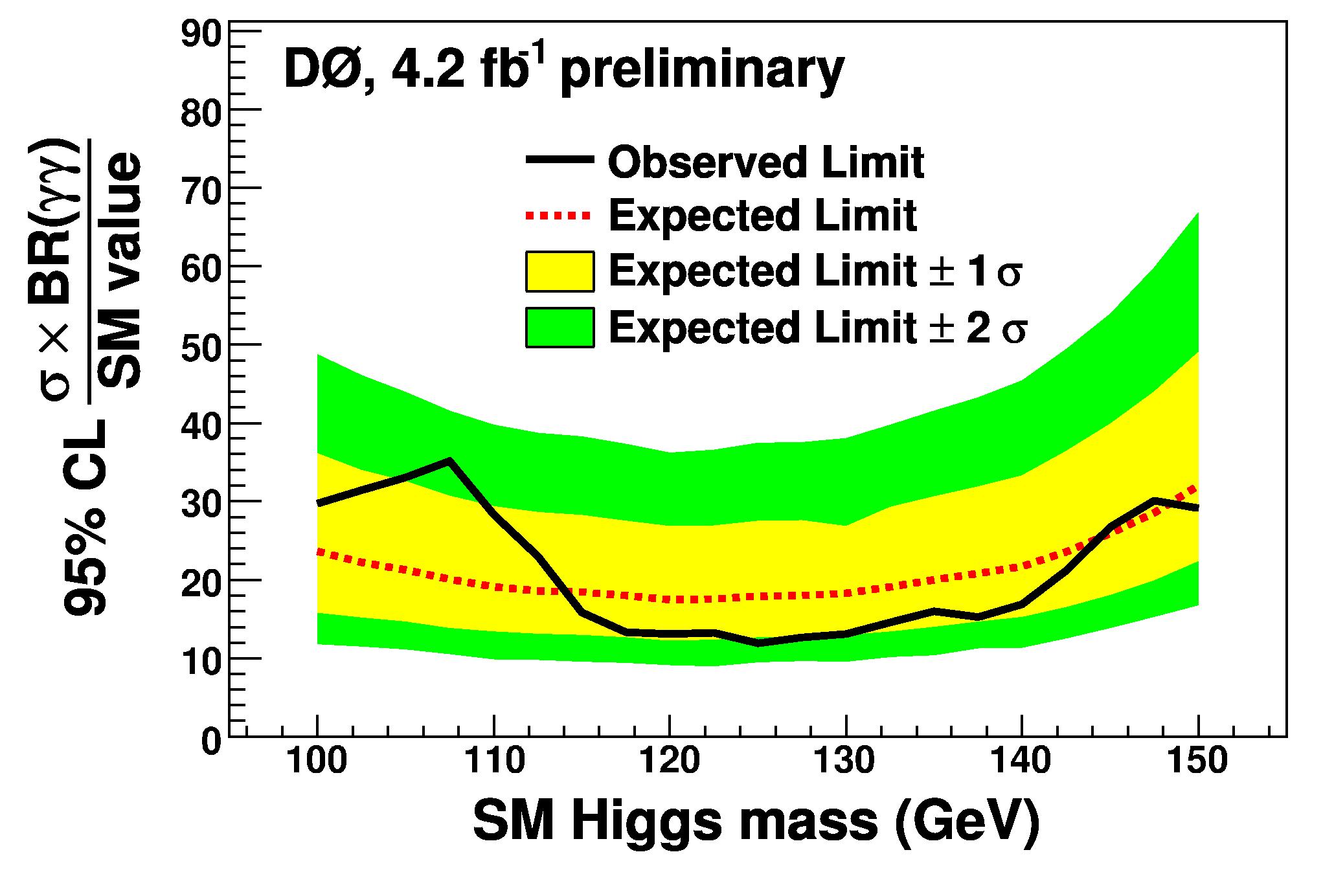
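The sideband procedure can be sketched as follows: fit an exponential to the mass spectrum outside a signal window, extrapolate it into the window, and compare with what is observed there. The sketch uses a simple weighted least-squares fit on ln(counts), an assumption standing in for the likelihood fit a real analysis would use; the ±10 GeV window and the noise-free toy spectrum are hypothetical.

```python
import math

def fit_exponential_sidebands(centers, counts, window):
    """Fit counts ~ A * exp(-k * m) using only bins outside the signal window.

    Weighted least squares on ln(n): the variance of ln(n) is about 1/n for
    Poisson counts, so each bin gets weight n. Bins inside window = (lo, hi)
    or with zero counts are left out. Returns (A, k).
    """
    pts = [(m, n) for m, n in zip(centers, counts)
           if n > 0 and not (window[0] <= m <= window[1])]
    sw = sum(n for _, n in pts)
    sx = sum(n * m for m, n in pts)
    sy = sum(n * math.log(n) for m, n in pts)
    sxx = sum(n * m * m for m, n in pts)
    sxy = sum(n * m * math.log(n) for m, n in pts)
    slope = (sw * sxy - sx * sy) / (sw * sxx - sx * sx)
    intercept = (sy - slope * sx) / sw
    return math.exp(intercept), -slope

def background_in_window(a, k, centers, window):
    """Extrapolate the fitted exponential into the masked window (sum over bins)."""
    return sum(a * math.exp(-k * m) for m in centers if window[0] <= m <= window[1])

# Hypothetical noise-free toy: 2.5 GeV bins from 100 to 200 GeV, exponential
# background plus 5 extra events in the bin centred at 118.75 GeV.
centers = [101.25 + 2.5 * i for i in range(40)]
counts = [5000.0 * math.exp(-0.03 * m) for m in centers]
counts[7] += 5.0
window = (110.0, 130.0)  # hypothetical +-10 GeV window for m_H = 120 GeV

a, k = fit_exponential_sidebands(centers, counts, window)
expected = background_in_window(a, k, centers, window)
observed = sum(n for m, n in zip(centers, counts) if window[0] <= m <= window[1])
print(f"k = {k:.4f}/GeV, expected {expected:.1f}, observed {observed:.1f} in window")
```

Masking the window keeps a possible signal from pulling the background fit; sliding the window along the spectrum gives one test per Higgs mass hypothesis.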

From the fits, a 95% confidence-level upper limit on the Higgs boson production cross section is extracted by standard procedures. As is by now commonplace, the cross-section limit is displayed divided by the expected standard model Higgs cross section, to show how far one is from excluding the SM Higgs at any mass value. The graph is shown below: readers of this blog may by now recognize at first sight the green 1-sigma and yellow 2-sigma bands showing the expected range of limits that the search was predicted to set. The actual limit is shown in black.
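The text does not detail the limit-setting procedure. As one common choice, here is a sketch of the modified-frequentist CLs criterion applied to a single-bin counting experiment in the signal window; the observed count, the expected background, and the expected SM yield are hypothetical. Dividing the limit on signal events by the SM expectation gives the kind of ratio plotted in the graph.

```python
import math

def poisson_cdf(n: int, mu: float) -> float:
    """P(N <= n) for a Poisson variable of mean mu (terms computed in log space)."""
    if mu == 0.0:
        return 1.0
    return sum(math.exp(k * math.log(mu) - mu - math.lgamma(k + 1)) for k in range(n + 1))

def cls_upper_limit(n_obs: int, b: float, cl: float = 0.95) -> float:
    """Signal yield s excluded at confidence level `cl` by the CLs criterion.

    CLs(s) = P(N <= n_obs | s + b) / P(N <= n_obs | b); the limit is the s at
    which CLs drops to 1 - cl. CLs decreases with s, so bisection works.
    """
    target = 1.0 - cl
    p_b = poisson_cdf(n_obs, b)
    lo, hi = 0.0, 10.0 + 10.0 * (n_obs + 1)
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if poisson_cdf(n_obs, mid + b) / p_b > target:
            lo = mid  # not yet excluded: the limit lies higher
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Hypothetical inputs: 40 events observed in the window, 38.5 expected from the
# sideband fit, and 1.5 SM Higgs events expected after selection.
limit = cls_upper_limit(n_obs=40, b=38.5)
print(f"95% CL limit: {limit:.1f} signal events = {limit / 1.5:.0f} x SM")
```

With zero observed events the CLs limit is -ln(0.05), about 3.0 events, independent of the background, a well-known property of the method.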

One notices that while this search is not yet sensitive to the Higgs boson, it is not so far from it any more! The LHC experiments will have a large advantage over DZERO (and CDF) in this particular business, since there the Higgs production cross-section is significantly larger. Backgrounds are also larger, however, so a detailed understanding of the detectors will be required before such a search can be carried out successfully at the LHC. For the time being, I congratulate my DZERO colleagues for pulling off this nice new result!

Anybody with an AAAS subscription willing to do me a favor ? February 20, 2009

Posted by dorigo in news, personal, physics, science. Tags: Higgs boson, journalism, LHC, science outreach, science reporting, Tevatron

Here I am, once again improperly and shamelessly using this public arena for my personal gain. This time, I need help from one of you who has a subscription to the American Association for the Advancement of Science.

It so happens that a couple of weeks ago I gave a phone interview to Adrian Cho about the LHC, the Tevatron, and the hunt for the Higgs boson. We discussed various scenarios, the hunt going on at the Tevatron, and other stuff. I am curious to know what Adrian made of our half-hour chat. Today I realized that the article has been published, but I have no access to it, since it is available on the Science Magazine site only to AAAS members.

I have to say, Adrian should have been kind enough to forward me a copy of the piece that benefited from the interview. I am sure he forgot to do it and will regret it once he reads this, or maybe he thinks I am already a member of the AAAS… Adrian, you are excused. But this leaves me without the article for a while, and I am a curious person… So if you have an AAAS account and you are willing to break copyright rules, I beg you to send me a file with the article! My email is dorigo (at) pd (dot) infn (dot) it. Thank you!

And, to show you just how serious I am when I say I am shameless, here’s more embarrassment: if you are a big shot of the AAAS, do you by any chance give free membership to people who do science outreach to the sole benefit of the advancement of Science ?

UPDATE: I am always amazed by the power of the internet and of blogging. These days you just have to ask and you will be given! So, thanks to Peter and Senth, I got to read the article by Adrian Cho.

I must admit I am underwhelmed. Not by the article, which is incisive and to the point. Only, I should have known that science journalists quote you for 1% of what you tell them, and use the rest to get informed and write a better piece. In fact, the piece starts by quoting me:

“Three years ago, nobody would have bet a lot that the Tevatron would be competitive [with the LHC] in the Higgs search. Now I think the tables are almost turned,” says Tommaso Dorigo, a physicist from the University of Padua in Italy who works with the CDF particle detector fed by the Tevatron and the CMS particle detector fed by the LHC.

… but that is the only quote. I can console myself by noting I am in quite good company: experiment spokespersons, Fermilab director Pier Oddone, CERN spokesperson James Gillies…

New CDF Combination of Higgs limits! February 11, 2009

Posted by dorigo in news, physics, science. Tags: CDF, Higgs boson, LHC, Tevatron

A brand-new combination of Higgs boson cross-section limits has recently been produced by the CDF experiment for the 2009 winter conferences. The results are almost one month old, but I decided to wait a bit before posting them here, in order to avoid arousing bad feelings in a few of my CDF colleagues: those who believe I have no right to post published results here in too timely a fashion, because they feel those results should first be presented at conferences by the real authors of the analyses.

Now I think it is about time to have the most relevant plots here, since they are all available from a public web page of CDF anyway; so here we go, with the most updated information. Mind you, these are CDF-only results: a sizable improvement in the limits will come when they get combined with the findings of DZERO. I seem to understand that the Tevatron combination group folks are dragging their feet this year, so we might as well take the CDF results and comment on them alone.

The first graph is the most important one of all: it describes the combination of CDF results, in the usual form of a “95% CL limit on the cross section, in units of the SM cross section”. It is shown below.

On the x axis is the Higgs boson mass, and on the y axis the cross-section limit. Different colors of the curves refer to different analyses, which target the various decay channels of the sought particle; dashed lines show expected limits, while solid ones show the limits actually obtained by the analysis.

As you can see by examining the thick red curve at the bottom, CDF by itself cannot rule out the 170-GeV point, which last summer was excluded by the CDF+D0 combination. However, a sizable improvement can be observed across the board in the results. The red curve, for one thing, is considerably flatter than it used to be, a sign that the low-mass searches have started to pitch in with momentum. Another thing to note is that these results correspond to 3.0/fb of analyzed luminosity or less (2.4/fb at low mass): there is already at least twice as much data waiting to be analyzed, and results are thus expected to sizably improve their sensitivity.

The above summary brings me to mention another important point. By looking at the graph, you might run the risk of failing to appreciate the enormous effort which CDF is putting into these searches. In truth, the name of the game is not “wait for more data and turn the crank”. Quite the opposite! The most important improvements in the discovery reach have been achieved in the course of the last five years by continuously improving the algorithms and the search methods, by refining tools, and by finding new avenues of investigation and new search channels neglected before. This is summarized masterfully in the two graphs shown below.

Above, you can see that for a Higgs mass of 115 GeV, the limit that CDF was able to set on its existence, in terms of cross section (well, in “times the SM cross section” units to be precise: the ones shown on the y axis), has improved much more than one would have expected by scaling down the limit with a simple square-root law, the one that Poisson statistics would dictate for statistically-limited measurements. Indeed, as time went by, the actual limits (colored points) have moved down almost vertically, a sign that the data has been used better and better! If you took the extrapolation expected after the first limit was published (the one in green), you would expect the limit today, with 2.4/fb analyzed, to be at 7xSM, while it is in fact at 3xSM: this corresponds to a 2.3x improvement in the limit, which would otherwise have required a 5.2 times larger analyzed dataset!!
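As a rough illustration of the square-root law, the sketch below scales a limit with integrated luminosity under the assumption that the search is purely statistics-limited and nothing else in the analysis changes. The function names are hypothetical and the numbers in the first example are made up; inverting the law shows why a 2.3x gain in the limit corresponds to roughly 2.3² ≈ 5 times more data.

```python
import math


def extrapolate_limit(limit_ref: float, lumi_ref: float, lumi_new: float) -> float:
    """Scale a cross-section limit (in units of the SM cross section) from an
    integrated luminosity lumi_ref to lumi_new, assuming a purely
    statistics-limited search whose limit improves as 1/sqrt(L)."""
    return limit_ref * math.sqrt(lumi_ref / lumi_new)


def data_factor_for_improvement(improvement: float) -> float:
    """Luminosity ratio the 1/sqrt(L) law needs to improve a limit by the
    given factor (the inverse of the scaling above)."""
    return improvement ** 2


# Hypothetical example: a 10xSM limit with 1/fb would become 5xSM with 4/fb.
print(extrapolate_limit(10.0, 1.0, 4.0))
# A 2.3x better limit would need about 2.3**2 = 5.29 times more data.
print(round(data_factor_for_improvement(2.3), 2))
```

The point of the exercise is the contrast: any improvement beyond what these two functions predict has to come from better analysis techniques, not from more data.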

Above, the same information is shown for the value. In this case, CDF is now expected to be able to set an exclusion alone with 9/fb of data, but we still expect to see some improvements in the data analyses, which should move the points well into the brown band. In this case, 7/fb of data might be enough.

The last two plots I wish to discuss are shown below. BEWARE: this is information that LHC scientists would really, really not like to see. So, if your life depends on the success of ATLAS or CMS, take my advice and stop reading now.

OK. The plot below shows the probability that the Tevatron experiments, by combining their datasets and results, may observe a 2-sigma evidence for SM Higgs production, with 5/fb and 10/fb of data collected by each. If the analyses do not perform better than they have so far, you get the solid lines: red for 5/fb, blue for 10/fb. If they improve as much as it is reasonable to predict, you get the dashed lines.

What to gather from the plot ? Well: it seems that, regardless of the Higgs boson mass, the Tevatron has a sizable chance to be able to say something good by the time CDF and D0 have analyzed the datasets they already possess (which are in excess of 5/fb each: the delivered luminosity of the Tevatron is passing the 6/fb mark as we speak, and the typical live time of the experiments is above 80%).

Below, we see the chance of a 3-sigma evidence. Again, there is a sizable chance of that happening, although if no additional improvements occur in the analyses, it seems that the Tevatron will need to get lucky!

I remember that in 2005 I gave a talk in Corfù (Greece) where I ventured to speculate on the possible scenarios for Higgs searches at the Tevatron and the LHC. One of the scenarios saw the two experiments competing to find the particle with roughly equal reach, and eventually producing a combined observation. That possibility does not seem too far-fetched any longer!

In the next few days I plan to discuss in some detail the most important analyses which contribute to the combination discussed above. Stay tuned…

What’s hot around February 10, 2009

Posted by dorigo in astronomy, Blogroll, cosmology, internet, italian blogs, mathematics, news, physics, science. Tags: Blogroll, LHC, pioneer anomaly, quirks, singularity, turbulence

For lack of interesting topics to blog about, I refer you to a short list of bloggers who have produced readable material in the last few days:

- The always witty Resonaances has produced an informative post on Quirks.

- My friend David Orban describes the recently-instituted Singularity University

- Stefan explains other types of singularities, those you can find in your kitchen!

- Dmitry has an outstanding post out today about the physics of turbulence, with four mini-pieces on the Reynolds number, viscosity, universality and intermittency. Worth a visit, if only for the pics!

- Marco discusses the long winter of LHC. Sorry, it is in Italian.

- Peter discusses the same issue in English.

- Marni points out a direct explanation of the Pioneer anomaly in terms of the difference between atomic clock time and astronomical time. Or, if you will, a change of the speed of light with time!

CMS and extensive air showers: ideas for an experiment February 6, 2009

Posted by dorigo in astronomy, cosmology, physics, science. Tags: anomalous muons, ATIC, CDF, CMS, cosmic rays, DELPHI, LHC, Pamela

The paper by Thomas Gehrmann and collaborators I cited a few days ago has inspired me to have a closer look at the problem of understanding the features of extensive air showers: the phenomenon of a localized stream of high-energy particles originating from the impact on the upper atmosphere of a very energetic proton or light nucleus.

Layman facts about cosmic rays

While the topic of cosmic rays, their sources, and their study is largely terra incognita to me -I only know the very basic facts, having learned them like most of you from popularization magazines-, I do know that a few of their features are not too well understood as of yet. Let me mention only a few issues below, with no fear of being shown how ignorant on the topic I am:

- The highest-energy cosmic rays have no clear explanation in terms of their origin. A few events with energy exceeding $10^{20}$ eV have been recorded by at least a couple of experiments, and they are the subject of an extensive investigation by the Pierre Auger observatory.

- There are a number of anomalies in their composition, their energy spectrum, and the composition of the showers they develop. The data from PAMELA and ATIC are just two recent examples of things we do not understand well, and which might have an exotic explanation.

- While models of their formation suppose that only light nuclei (iron at most) make up the flux of primary hadrons, some data (for instance this study by the Delphi collaboration) seem to imply otherwise.

The paper by Gehrmann addresses in particular the latter point. There appears to be a failure in our ability to describe the development of air showers producing very large numbers of muons, and this failure might be due to modeling uncertainties, heavy nuclei as primaries, or the creation of exotic particles with muonic decays, in decreasing order of likelihood. For sure, if an exotic particle like the 300 GeV one hypothesized in the interpretation paper produced by the authors of the CDF study of multi-muon events (see the tag cloud on the right column for an extensive review of that result) existed, the Tevatron would not be the only place to find it: high-energy cosmic rays would produce it in sizable amounts, and the multi-muon signature from its decay in the atmosphere might end up showing in those air showers as well!

Mind you, large numbers of muons are by no means a surprising phenomenon in high-energy cosmic ray showers. What happens is that a hadronic collision between the primary hadron and a nucleus of nitrogen or oxygen in the upper atmosphere creates dozens of secondary light hadrons. These in turn hit other nuclei, and the developing hadronic shower progresses until the hadrons fall below the energy required to create more secondaries. The created hadrons then decay, and in particular charged-pion decays ($\pi^\pm \to \mu^\pm \nu$) will create a lot of muons.

Muons have a lifetime of two microseconds, and if they are energetic enough, they can travel many kilometers, reaching the ground and whatever detector we set there. In addition, muons are very penetrating: a muon needs just 52 GeV of energy to make it 100 meters underground, through the rock lying on top of the CERN detectors. Of course, air showers include not just muons, but electrons, neutrinos, and photons, plus protons and other hadronic particles. But none of these particles, except neutrinos, can make it deep underground. And neutrinos pass through unseen…
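A back-of-the-envelope check of the "many kilometers" statement: thanks to time dilation, the mean decay length of a muon in the lab is βγcτ. The sketch below is only an illustration, with a hypothetical function name; it ignores the energy a muon loses while crossing the atmosphere and the rock, so it overestimates how far a muon of a given starting energy travels before decaying.

```python
import math

MUON_MASS_GEV = 0.1056584  # muon rest mass, GeV/c^2
MUON_CTAU_M = 658.6        # c * tau for the muon (tau ~ 2.197 microseconds), metres


def mean_decay_length_m(energy_gev: float) -> float:
    """Mean lab-frame decay length beta*gamma*c*tau of a muon with total energy E.

    Energy loss along the path is neglected (illustrative assumption).
    """
    gamma = energy_gev / MUON_MASS_GEV
    beta_gamma = math.sqrt(gamma ** 2 - 1.0)
    return beta_gamma * MUON_CTAU_M


for e in (1.0, 10.0, 100.0):
    print(f"E = {e:6.1f} GeV -> mean decay length ~ {mean_decay_length_m(e) / 1000:.1f} km")
```

Already at a few GeV the mean decay length exceeds the roughly 15 km a muon produced high in the atmosphere must travel to reach the ground, which is why so many of them make it.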

Now, if one reads the Delphi publication, as well as information from other experiments which have studied high-multiplicity cosmic-ray showers, one learns a few interesting facts. Delphi found a large number of events with so many muon tracks that they could not even count them! In a few cases, they could just quote a lower limit on the number of muons crossing the detector volume. One such event is shown in the picture on the right: they infer that an air shower passed through the detector by observing voids in the distribution of hits!

The number of muons seen underground is an excellent estimator of the energy of the primary cosmic ray, as the Kascade collaboration result shown on the left demonstrates (on the abscissa is the logarithm of the energy of the primary cosmic ray, and on the y axis the number of muons per square meter measured by the detector). But to compute the energy and composition of cosmic rays from the characteristics we observe on the ground, we need detailed simulations of the mechanisms creating the shower, and these simulations require an understanding of the physical processes at the basis of the production of secondaries, which are known only to a certain degree. I will get back to this point, but here I just mean to point out that a detector measuring the number of muons gets an estimate of the energy of the primary nucleus. The energy, but not the species!

As I was mentioning, the Delphi data (and that of other experiments, too) showed that there are too many high-muon-multiplicity showers. The graph on the right shows the observed excess at very high muon multiplicities (the points on the far right of the graph). This is a 3-sigma effect, and it might be caused by modeling uncertainties, but it might also mean that we do not understand the composition of the primary cosmic rays: a heavier nucleus of a given energy usually produces more muons than a lighter one.
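To see why a heavier primary yields more muons at the same energy, one can use the Heitler-Matthews superposition model, a simple textbook toy model and not the detailed simulations that Delphi or KASCADE rely on. In it, a proton shower of energy E gives N_μ = (E/ξ_c)^β muons, and a nucleus of mass number A behaves like A nucleons of energy E/A each, so N_μ grows as A^(1-β). The values β = 0.9 and ξ_c = 20 GeV are illustrative assumptions, and the function names are hypothetical. The inverse function shows that a muon count alone cannot disentangle energy from species: the inferred energy depends on which primary you assume.

```python
def muon_number(energy_gev: float, mass_number: int,
                beta: float = 0.9, xi_c_gev: float = 20.0) -> float:
    """Toy Heitler-Matthews muon number for a primary of energy E and mass number A.

    Superposition: the nucleus acts like A nucleons of energy E/A each, and a
    proton shower of energy E yields (E / xi_c)**beta muons, so
    N_mu = A * (E / (A * xi_c))**beta = A**(1 - beta) * (E / xi_c)**beta.
    """
    return mass_number * (energy_gev / (mass_number * xi_c_gev)) ** beta


def energy_from_muons(n_mu: float, mass_number: int,
                      beta: float = 0.9, xi_c_gev: float = 20.0) -> float:
    """Invert the toy model: energy inferred from a muon count, for an assumed species."""
    return xi_c_gev * (n_mu / mass_number ** (1.0 - beta)) ** (1.0 / beta)


E = 1.0e7  # a 10 PeV primary, expressed in GeV
ratio = muon_number(E, 56) / muon_number(E, 1)  # iron vs. proton at equal energy
print(f"iron/proton muon ratio: {ratio:.2f}")

n_obs = muon_number(E, 56)  # pretend this is the muon count of an iron shower
print(f"energy if a proton is assumed: {energy_from_muons(n_obs, 1):.3g} GeV")
print(f"energy if iron is assumed:     {energy_from_muons(n_obs, 56):.3g} GeV")
```

Within this toy model the iron-to-proton muon ratio at fixed energy is 56^0.1, about 1.5; real showers deviate from it, which is exactly where the modeling uncertainties discussed next come in.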

The modeling uncertainties are due to the fact that the very forward production of hadrons in a nucleus-nucleus collision is governed by QCD at very small energy scales, where we cannot calculate the theory to a good approximation. So, we cannot really compute with the precision we would like how likely it is that a 1,000,000-TeV proton, say, produces a forward-going 1-TeV proton in the collision with a nucleus of the atmosphere. In other words, the energy distribution of secondaries produced in the forward direction is not so well known, and this is reflected in the uncertainty on the shower composition.

Enter CMS

Now, what does CMS have to do with all the above ? Well. For one thing, last summer the detector was turned on in the underground cavern at Point 5 of the LHC, and it collected 300 million cosmic-ray events. This is a huge data sample, made possible by the large size of the detector and the beautiful working of its muon chambers (which, by the way, have been designed by physicists of Padova University!). Such a large dataset already includes very high-multiplicity muon showers, and some of my collaborators are busy analyzing that gold mine. Measurements of the cosmic ray properties are ongoing.

One might hope that the collection of cosmic rays will continue even after the LHC is turned on. I believe it will, but only during the short periods when there is no beam circulating in the machine. The cosmic-ray data thus collected is typically used to keep the system “warm” while waiting for more proton-proton collisions, but it will not amount to an orders-of-magnitude increase in statistics with respect to what was already collected last summer.

The CMS cosmic-ray data can indeed provide an estimate of several characteristics of the air showers, but it will not be capable of providing results qualitatively different from the findings of Delphi, although, of course, it might provide a confirmation of simulations, disproving the excess observed by that experiment. The problem is that very energetic events are rare, so one must actively pursue them rather than just turning on the cosmic-ray data collection when not in collider mode. But there is one further important point: since only muons are detected, one cannot really understand whether the simulation is tuned correctly, and one cannot obtain a critical additional piece of information: the amount of energy that the shower produced in the form of electrons and photons.

The electron and photon component of the air shower is a good discriminant of the nucleus which produced the primary interaction, as the plot on the right shows. It is in fact a crucial piece of information to rule out the presence of nuclei heavier than iron, or to determine the composition of primaries in terms of light nuclei. Since the number of muons in high-multiplicity showers is connected to the nuclear species as well, by determining both quantities one would really be able to understand what is going on. [In the plot, the quantity Y is plotted as a function of the primary cosmic ray energy. Y is the ratio between the logarithms of the numbers of detected muons and electrons. You can observe that Y is higher for iron-induced showers (the full black squares).]

Idea for a new experiment

The idea is thus already there, if you can add one plus one. CMS is underground. We need a detector at ground level to be sensitive to the “soft” component of the air shower, the one due to electrons and photons, which cannot punch through more than a meter of rock. So we may take a certain number of scintillation counters, layered and alternated with lead sheets, all sitting on top of a thicker set of lead bricks, underneath which we may set some drift tubes or, even better, resistive plate chambers.

We can build a 20- to 50-square-meter detector this way with a relatively small amount of money, since the technology is really simple and we can even scavenge material here and there (for instance, we can use spare chambers of the CMS experiment!). Then, we just build a simple coincidence logic between the resistive plate chambers, requiring that several parts of our array fire together at the passage of many muons, and send the triggering signal 100 meters down, where CMS may receive an “auto-accept” to read out the event regardless of the presence of a collision in the detector.
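The coincidence requirement can be modeled in a few lines of software. The sketch below is a hypothetical model, not an existing CMS trigger component: in a real setup this logic would live in fast electronics, and the chamber identifiers, hit times, window length and minimum number of chambers are all illustrative assumptions. It fires when at least `min_chambers` distinct chambers register hits within any time window of length `window_ns`, using a sliding window over time-ordered hits.

```python
from typing import Dict, Sequence, Tuple


def coincidence_trigger(hits: Sequence[Tuple[int, float]],
                        min_chambers: int, window_ns: float) -> bool:
    """Return True if at least min_chambers distinct chambers fire within
    some time window of length window_ns.

    hits: (chamber_id, time_ns) pairs from the hypothetical ground-level array.
    """
    ordered = sorted(hits, key=lambda h: h[1])
    start = 0
    counts: Dict[int, int] = {}  # hits per chamber inside the current window
    for chamber, t in ordered:
        counts[chamber] = counts.get(chamber, 0) + 1
        # Drop hits that are now too early to belong to the same window.
        while t - ordered[start][1] > window_ns:
            old = ordered[start][0]
            counts[old] -= 1
            if counts[old] == 0:
                del counts[old]
            start += 1
        if len(counts) >= min_chambers:
            return True
    return False


# Three chambers hit within 20 ns, plus a late stray hit.
example = [(1, 0.0), (2, 10.0), (3, 20.0), (1, 500.0)]
print(coincidence_trigger(example, min_chambers=3, window_ns=25.0))
```

Counting distinct chambers rather than raw hits means that a single noisy chamber firing repeatedly cannot fake a multi-muon shower on its own.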

The latter is the most complicated part of the whole idea: modifying existing things is always harder than creating new ones. But it should not be too hard to read out CMS parasitically, and collect those high-multiplicity showers at a very low rate. Then, the readout of the ground-based electromagnetic calorimeter should provide us with an estimate of the (local) electron-to-muon ratio, which is what we need to determine the weight of the primary nucleus.

If the above sounds confusing, it is entirely my fault: I have dumped here some loose ideas, with the aim of coming back to them when I need them. After all, this is a log. A Web log, but always a log of my ideas… But I wish to investigate the feasibility of this project further. Indeed, CMS will for sure pursue cosmic-ray measurements with the 300M events it has already collected. And CMS does have spare muon chambers. And CMS does have plans to store them at Point 5… Why not just power them up and build a poor man’s trigger ? A calorimeter might come later…

Black holes hype does not decay February 3, 2009

Posted by dorigo in astronomy, Blogroll, cosmology, humor, news, physics, politics, religion, science. Tags: black holes, doomsday, LHC

While the creation of black holes in the high-energy proton-proton collisions that the LHC will hopefully start providing this fall is not guaranteed, and while the scientific establishment is basically unanimous in claiming that those microscopic entities would anyway decay in a time so short that even top quarks look long-lived in comparison, the hype about doomsday being unwittingly delivered by the hands of psychotic, megalomaniac CERN scientists continues unhindered.

Here are a few recent links on the matter (thanks to M.M. for pointing them out):

The source of the renewed fire appears to be a paper published on the arxiv a couple of weeks ago. In it, the authors (R. Casadio, S. Fabi, and B. Harms) discuss a very specific model (a warped brane-world scenario), in whose context microscopic black holes might have a chance to survive for a few seconds.

Never mind the fact that the authors say from the very abstract, as if sensing the impending danger of being exploited, “we argue against the possibility of catastrophic black hole growth at the LHC”. This is not the way it should be done: you cannot assume a very specific model and then draw general conclusions, because others opposing your view may always use the same crooked logic and reverse the conclusions. However, I understand that the authors made a genuine effort to figure out what the phenomenology of microscopic black holes created in the scenario they considered could be.

The accretion of a black hole may occur via direct collision with matter and via gravitational interactions with it. For microscopic black holes, however, the latter (called Bondi accretion) is basically negligible. The authors compute the evolution of the mass of the BH as a function of time for different values of a critical mass parameter, which depends on the model and is connected to the characteristic thickness of the brane. They explicitly work out two examples. In the first, when the critical mass is […], a 10 TeV black hole, created with 5 TeV/c momentum, is shown to decay with a roughly exponential law, but with a lifetime much longer (of the order of a picosecond) than that usually assumed for a micro-BH evaporating through Hawking radiation. In the second case, where the critical mass is […], the maximum BH mass is reached at […] after about one second. Even in this scenario, the capture radius of the object is very small, and the object decays with a lifetime of about 100 seconds. The authors also show that “there is a rather narrow range of parameters […] for which RS black holes produced at the LHC would grow before evaporating”.

In the figure on the right, the base-10 logarithm of the maximum distance traveled by the black hole (expressed in meters) is computed as a function of the base-10 logarithm of the critical mass (expressed in kilograms), for a black hole of 10 TeV mass produced by the LHC with a momentum of 5 TeV/c. As you can see, if the critical mass parameter is large enough, these things would be able to reach you in your bedroom. Scared ? Let’s read their conclusions then.

“[…] Indeed, in order for the black holes created at the LHC to grow at all, the critical mass should be […]. This value is rather close to the maximum compatible with experimental test of Newton’s law, that is […] (which we further relaxed to […] in our analysis). For smaller values of […], the black holes cannot accrete fast enough to overcome the decay rate. Furthermore, the larger […] is taken to be, the longer a black hole takes to reach its maximum value and the less time it remains near its maximum value before exiting the Earth.

We conclude that, for the RS scenario and black holes decribed by the metric [6], the growth of black holes to catastrophic size does not seem possible. Nonetheless, it remains true that the expected decay times are much longer (and possibly >>1 sec) than is typically predicted by other models, as was first shown in [4]”.

Here are some random reactions I collected from the physics arxiv blog (no mention of the authors’ names, since they do not deserve it):

- This is starting to get me nervous.

- Isn’t the LHC in Europe? As long as it doesn’t suck up the USA, I’m fine with it.

- It is entirely possible that the obvious steps in scientific discovery may cause intelligent societies to destroy themselves. It would provide a clear resolution to the Fermi paradox.

- I’m pro science and research, but I’m also pro caution when necessary.

- That’s what I asked and CERN never replied. My question was: “Is it possible that some of these black might coalesce and form larger black holes? larger black holes would be more powerful than their predecessors and possibly aquire more mass and grow still larger.”

- The questions is, whether these scientists are competent at all, if they haven’t made such analysis a WELL BEFORE the LHC project ever started.

- I think this is bad. American officials should do something about this because if scientists do end up destroying the earth with a black hole it won’t matter that they were in Europe, America will get the blame. On the other hand, if we act now to be seen dealing as a responsible member of the international community, then, if the worst happens, we have a good chance of pinning it on the Jews.

- The more disturbing fact about all this is the billions and billions being spent to satisfy the curiosity of a select group of scientists and philosophers. Whatever the results will yield little real-world benefit outside some incestuous lecture circuit.

- “If events at the LHC swallow Switzerland, what are we going to do without wrist watches and chocolate?” Don’t worry, we’ll still have Russian watches. they’re much better, faster even.

It goes on, and on, and on. Boy, it is highly entertaining, but unfortunately, I fear this is taking a bad turn for Science. I tend to believe that on this particular issue, no discussion would be better than any discussion -it is like trying to argue with a fanatic about the reality of a statue of the Virgin weeping blood.

… So, why don’t we just shut up on this particular matter ?

Hmm, if I post this, I would be going against my own suggestion. Damned either way.

Babysitting this week February 1, 2009

Posted by dorigo in news, personal, physics. Tags: anomalous muons, CDF, CMS, hidden valley, LHC

Blogging is one of the activities that will get slightly reduced this week, along with others that are not strictly necessary for my survival. Mariarosa has left for Athens this morning with three high-school classes of her school, Liceo Foscarini. They will visit Greece for a whole week, and be back to Venice on Saturday.

I am not scared by the obligation of having to care for my two kids, and I do like such challenges -I maintain that my wife should not complain too much when it is me who leaves for a week, much more frequently- but of course the management of our family life will take all of my spare time, plus some.

Blogging material, in the meantime, is piling up. There are beautiful results coming out of CDF these days (isn’t that becoming a rule?). Furthermore, the Tevatron has recently been running excellently, and the LHC seems to be in the middle of a crisis over whether to risk a second, colossal failure by pushing the energy up to 10 TeV to put the Tevatron off the table in the shortest time possible, or to play it safe and keep the collision energy at 6 TeV, accepting the risk of being scooped on the juiciest bits of physics left over to party with.

And multi-muons keep me busy these days. Besides the starting analysis in the CMS-Padova group, there are papers worth discussing in the arxiv. This one was published a few days ago, and last Thursday we had in Padova one of the authors, Thomas Gehrmann, discussing QCD calculations of event-shape observables in a seminar, which of course allowed me to chat with him about his hunch on the hidden valley scenarios he discusses in his paper. More on these things next week, after I put my kids to sleep!

Information control from CERN January 27, 2009

Posted by dorigo in news, physics, science. Tags: cern, LHC, science outreach, scientific blogging

A piece by Matthew Chalmers titled “CERN: the view from inside” appeared yesterday on Physics World’s web site. It is an insightful interview with James Gillies, head of communications at CERN.

The interview focuses mostly on the media coverage of the LHC startup of last September 10th, and on the steps that made it a global success, with an estimated exposure of one billion people. The point is made that now “LHC” can be used out of context without problems, but I hope the returns to science are larger than that.

More interesting to us science bloggers is the description of how information on the September 19th incident was provided by CERN, and of the measures that were put in place to prevent unwanted, uncontrolled news from leaking out in blogs and other unauthorized media. The LHC logbook was edited, and pictures of the incident were password-protected. I do not think this is too worrisome: the management decided it was the best thing to do under the exceptional circumstances, and I do not blame them for being tight-lipped.

The piece ends by discussing the restrictive policy of the lab and its experiments towards blogging. The point is made that unconfirmed rumors damage science, but the matter is not really discussed in any detail. People keep claiming that discussion of unconfirmed signals is harmful to Science, but what I keep hearing is that it is harmful to their interests. Do directors general want to be the ones releasing important lab information to protect us, or to protect their chair ? Do principal investigators insist that results are released only after a publication is sent to the journal to avoid waves of imprecise physics from being distributed to unarmed citizens, or to increase their exposure when they make an announcement ?

I insist on being naive on this matter. I think that results in basic science do not belong to their discoverers, nor to the experimental collaborations: they, as much as the data they are based upon, belong to the people.

In the Physics World interview, Gillies claims that the lab will act to counter public discussion of not-yet-confirmed three-sigma effects (the article says this corresponds to a “less than 1% chance for a statistical fluke”, but I guess it was Matthew who made this imprecise to simplify matters for his numerically challenged audience: the probability is actually 0.3%). Well, I think the laboratory will have to be very careful if it wants to get down to the level of bloggers: the CERN management seems to talk and think as if blogging were a controllable phenomenon, but believe me, it ain’t. Not until they shut the whole internet thing down.
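
The 0.3% figure is the two-sided Gaussian tail probability beyond three standard deviations. The short Python sketch below reproduces it with the standard library's `math.erfc`, and also prints the one-sided value, the convention often adopted in particle physics when quoting the significance of an excess. The function name is just illustrative.

```python
import math

def gaussian_tail_probability(n_sigma: float, two_sided: bool = True) -> float:
    """Probability of a Gaussian fluctuation at least n_sigma standard deviations from the mean."""
    # erfc(n / sqrt(2)) is the area in both tails beyond |x| = n sigma
    p = math.erfc(n_sigma / math.sqrt(2.0))
    return p if two_sided else 0.5 * p

for n in (1, 2, 3, 5):
    print(f"{n} sigma: two-sided {gaussian_tail_probability(n):.3g}, "
          f"one-sided {gaussian_tail_probability(n, two_sided=False):.3g}")
```

For three sigma this gives roughly 0.0027 (two-sided) and 0.00135 (one-sided); either way, “less than 1%” is true but loose.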

In May 2007 an anonymous comment left on my blog about a large signal of supersymmetric Higgs decays seen by the D0 collaboration in events with four b-quark jets started a runaway phenomenon which ended up in the New York Times and on Slate, plus other media around the world. The D0 collaboration was not happy about it, but what could they do? The answer is simple: nothing. I wonder whether the CERN experiments have aces up their sleeves instead…

Guess the function: results January 21, 2009

Posted by dorigo in physics, science.Tags: bremsstrahlung, CMS, LHC, PDF, QCD, QED, Z boson

Thanks to the many offers of help I received a few days ago, when I asked for hints on possible functional forms to interpolate a histogram I was finding hard to fit, I have solved the problem and can now release the results of my study.

The issue is the following: at the LHC, Z bosons are produced by electroweak interactions through quark-antiquark annihilation. The colliding quarks have variable energies, governed by the parton distribution functions (PDFs), which determine how much of the proton’s energy each quark carries; and the Z boson has a resonance shape with a sizable width: 2.5 GeV for a 91 GeV mass. The varying center-of-mass energy of the collision, set by the random quark energies drawn from the PDFs, “samples” the resonance curve, distorting the mass distribution of the produced Z bosons.
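
To see how sampling the resonance with a falling parton luminosity distorts the lineshape, here is a toy Python calculation. It is only a sketch: the real luminosity comes from the PDF sets and is not a simple power law, so the `m**-4` falloff below is a hypothetical stand-in; the Z mass and width are the standard values (91.1876 GeV and 2.4952 GeV). Weighting the Breit-Wigner by any decreasing function moves probability toward the low-mass side and pulls the mean mass down, which is the kind of distortion described above.

```python
M_Z = 91.1876     # Z mass in GeV
GAMMA_Z = 2.4952  # Z width in GeV

def breit_wigner(m: float) -> float:
    """Relativistic Breit-Wigner in the mass variable (unnormalized)."""
    return 1.0 / ((m * m - M_Z * M_Z) ** 2 + (M_Z * GAMMA_Z) ** 2)

def toy_luminosity(m: float, power: float = 4.0) -> float:
    """Hypothetical falling parton luminosity ~ m**-power (illustrative only)."""
    return m ** (-power)

def mean_mass(weight, lo: float = 81.0, hi: float = 101.0, n: int = 4000) -> float:
    """Weighted mean of m over [lo, hi], using midpoint-rule sums."""
    step = (hi - lo) / n
    num = den = 0.0
    for i in range(n):
        m = lo + (i + 0.5) * step
        w = weight(m)
        num += m * w
        den += w
    return num / den

pure = mean_mass(breit_wigner)
distorted = mean_mass(lambda m: breit_wigner(m) * toy_luminosity(m))
print(f"mean mass, bare resonance:         {pure:.3f} GeV")
print(f"mean mass, resonance x luminosity: {distorted:.3f} GeV")
```

Changing `power` (a hypothetical parameter) changes the size of the shift, but not its direction.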

The above is not the end of the story, but just the beginning: there are also electromagnetic (QED) corrections due to the radiation of photons, both “internally” and by the two muons into which the Z decays (I am focusing on that final state of Z production: a pair of high-momentum muons from $Z \to \mu^+\mu^-$). Also, electromagnetic interactions interfere with Z production, because a virtual photon may produce the same final state (two muons) by means of the so-called “Drell-Yan” process. All these effects can only be accounted for by detailed Monte Carlo simulations.

Now, let us treat all of that as a black box: we only want to describe the mass distribution of muon pairs from Z production at the LHC, and we have a pretty good simulation program, Horace (developed by four physicists at Pavia University: C.M. Carloni Calame, G. Montagna, O. Nicrosini and A. Vicini), which handles the effects discussed above. My problem is to describe with a simple function the lineshape (the mass distribution) of the produced Z bosons in different bins of Z rapidity. Rapidity is a quantity connected to the momentum of the particle along the beam direction: since the colliding quarks have different energies, the Z may receive a large boost along that direction. And crucially, the lineshape varies with Z rapidity.
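
For concreteness, the rapidity of a particle with energy $E$ and longitudinal momentum $p_z$ is $y = \frac{1}{2}\ln\frac{E+p_z}{E-p_z}$. At leading order, a Z produced by quarks carrying momentum fractions $x_1$ and $x_2$ has $M^2 = x_1 x_2 s$ and $y = \frac{1}{2}\ln(x_1/x_2)$, so unequal quark energies translate directly into a boost. The Python sketch below checks this; the 14 TeV center-of-mass energy and the momentum fractions are assumptions chosen for illustration, and the partons are treated as massless and collinear with the beams.

```python
import math

def rapidity(energy: float, pz: float) -> float:
    """Rapidity y = 0.5 * ln((E + pz) / (E - pz)) along the beam axis."""
    return 0.5 * math.log((energy + pz) / (energy - pz))

def z_kinematics(x1: float, x2: float, sqrt_s: float):
    """Leading-order q qbar -> Z: return (mass, rapidity) from the momentum fractions."""
    # Partons carry x1 * sqrt_s / 2 along +z and x2 * sqrt_s / 2 along -z
    energy = 0.5 * sqrt_s * (x1 + x2)
    pz = 0.5 * sqrt_s * (x1 - x2)
    mass = sqrt_s * math.sqrt(x1 * x2)
    return mass, rapidity(energy, pz)

SQRT_S = 14000.0  # GeV; assumed collision energy, for illustration only
m, y = z_kinematics(0.0130, 0.0033, SQRT_S)  # x1 * x2 * s puts M near the Z pole
print(f"M = {m:.1f} GeV, y = {y:.3f}")       # y equals 0.5 * ln(x1 / x2)
```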

In the post I published here a few days ago, I presented the residuals of lineshape fits that used the original resonance form, neglecting all PDF and QED effects. By fitting those residuals with a suitable parametrized function, I was trying to arrive at a better parametrization of the full lineshape.

After many attempts, I can now release the results. The template for the residuals is shown below, interpolated with the function I obtained following advice from Lubos Motl:

After multiplying that function by the original Breit-Wigner resonance function, I could fit the 24 lineshapes extracted from a binning in rapidity. This produced additional residuals, which are of course much smaller than the first-order ones above, and have a sort of parabolic shape this time. A couple of them are shown on the right.
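
The step of multiplying the first-order correction by the Breit-Wigner, and why the leftover residuals come out much smaller, can be illustrated with a noiseless toy in Python. This is a sketch under stated assumptions: residuals are taken as relative deviations (data − model)/model (the post does not say which definition was used), and both the correction `first_order_correction` and the small parabolic leftover `CURV` are hypothetical, not the functions fitted to the Horace lineshapes.

```python
M_Z, GAMMA_Z = 91.1876, 2.4952  # GeV

def breit_wigner(m):
    """Relativistic Breit-Wigner in the mass variable (unnormalized)."""
    return 1.0 / ((m * m - M_Z * M_Z) ** 2 + (M_Z * GAMMA_Z) ** 2)

def first_order_correction(m, a=0.03, b=-0.004):
    """Hypothetical stand-in for the fitted correction R(m); not the post's function."""
    return 1.0 + a + b * (m - M_Z)

def factorized(m):
    """Improved model: Breit-Wigner multiplied by the first-order correction."""
    return breit_wigner(m) * first_order_correction(m)

CURV = 2e-4  # hypothetical size of a leftover parabolic effect

def toy_lineshape(m):
    """Toy histogram content: BW x R x (1 + CURV * (m - M_Z)**2), with no statistical noise."""
    return breit_wigner(m) * first_order_correction(m) * (1.0 + CURV * (m - M_Z) ** 2)

def relative_residuals(observed, model, masses):
    """(observed - model) / model, bin by bin."""
    return [(observed(m) - model(m)) / model(m) for m in masses]

masses = [81.0 + 0.5 * i for i in range(41)]  # 81-101 GeV
first = relative_residuals(toy_lineshape, breit_wigner, masses)   # vs bare resonance
second = relative_residuals(toy_lineshape, factorized, masses)    # vs BW x R
print(f"largest first-order residual:  {max(abs(r) for r in first):.4f}")
print(f"largest second-order residual: {max(abs(r) for r in second):.4f}")
```

In this toy the second-order residuals are exactly the parabola put in by hand, which is the pattern the text describes; with real histograms the leftover shape has to be found by fitting.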

I then interpolated those residuals with parabolas and extracted their fit parameters. Next, I parametrized the parameters themselves, as the graph below shows: the three degrees of freedom vary roughly linearly with Z rapidity. The graphs show the dependence of the five parameters on Z rapidity for lineshapes extracted with the CTEQ PDF set (left column) and with the MRST set (center column), together with the ratio of the two parametrizations (right column), which is not too different from 1.0.
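
The two-stage procedure (a parabola per rapidity bin, then a straight line in rapidity for each parabola coefficient) can be sketched in plain Python as follows. Everything about the data is hypothetical: the residuals come from a noiseless toy whose coefficients are linear in $y$ by construction, the 24 bin centers are placeholders, and `polyfit` is a small hand-written least-squares helper (normal equations plus Gaussian elimination), not a library call. A real fit would use the Horace histograms, with their statistical errors as weights.

```python
M_Z = 91.1876  # GeV

def polyfit(xs, ys, deg):
    """Least-squares polynomial fit; returns [c0, c1, ...] for c0 + c1*x + c2*x**2 + ..."""
    n = deg + 1
    # Normal equations A c = b with A[i][j] = sum x^(i+j) and b[i] = sum y * x^i
    a = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for k in range(col, n):
                a[r][k] -= f * a[col][k]
            b[r] -= f * b[col]
    # Back substitution
    c = [0.0] * n
    for i in reversed(range(n)):
        c[i] = (b[i] - sum(a[i][k] * c[k] for k in range(i + 1, n))) / a[i][i]
    return c

masses = [81.0 + 0.5 * i for i in range(41)]         # 81-101 GeV
rapidity_bins = [0.05 + 0.1 * k for k in range(24)]  # hypothetical centers of 24 bins

def toy_residual(m, y):
    """Hypothetical residual: a parabola in (m - M_Z) with coefficients linear in y."""
    d = m - M_Z
    return (0.01 + 0.02 * y) + (-0.001 + 0.0005 * y) * d + (0.0002 - 0.0001 * y) * d * d

# Stage 1: one parabola per rapidity bin -> three coefficients per bin
xs = [m - M_Z for m in masses]
per_bin = [polyfit(xs, [toy_residual(m, y) for m in masses], 2) for y in rapidity_bins]

# Stage 2: a straight line in y for each parabola coefficient
linear_params = [polyfit(rapidity_bins, [coeffs[i] for coeffs in per_bin], 1) for i in range(3)]
for i, (intercept, slope) in enumerate(linear_params):
    print(f"coefficient {i}: {intercept:+.5f} {slope:+.5f} * y")
```

With noiseless toy input, the second stage recovers the linear coefficients put in by hand; with real histograms one would also propagate the first-stage uncertainties into the second-stage fit.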

Finally, the 24 fits that use the full shape, with all rapidity-dependent parameters now fixed, are shown below (the thumbnail displays only one fit; the full-size image shows all of them together).

The function used is detailed in the slide below:

I am rather satisfied with the result, because the residuals of these final fits are really small, as shown on the right: they are certainly smaller than the uncertainties due to PDF and QED effects. The function above will now be used to derive a parametrization of the probability that we observe a dimuon pair with a given mass $M$ at a rapidity $y$, as a function of the momentum scale in the tracker and the muon momentum resolution.
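
One common way to build such a probability, sketched here under explicit assumptions, is to scale the true mass by the momentum-scale factor (if both muon momenta are multiplied by a factor $s$, the dimuon mass of massless muons scales by the same $s$) and convolve the lineshape with a Gaussian resolution of width $\sigma$. In the Python sketch below a bare relativistic Breit-Wigner stands in for the rapidity-dependent shape fitted above, and the values of `scale` and `sigma` are hypothetical; the actual parametrization may treat the resolution differently, for instance per muon rather than on the pair mass.

```python
import math

M_Z, GAMMA_Z = 91.1876, 2.4952  # GeV

def true_lineshape(m):
    """Stand-in for the fitted, rapidity-dependent lineshape (here a bare Breit-Wigner)."""
    return 1.0 / ((m * m - M_Z * M_Z) ** 2 + (M_Z * GAMMA_Z) ** 2)

def observed_density(lineshape, scale, sigma, lo=60.0, hi=120.0, n=600):
    """P(M) for the reconstructed mass, assuming M = scale * m smeared by a Gaussian of width sigma."""
    step = (hi - lo) / n
    grid = [lo + (i + 0.5) * step for i in range(n)]
    weights = [lineshape(m) for m in grid]
    total = sum(weights)
    weights = [w / total for w in weights]  # true lineshape normalized to 1 on [lo, hi]
    gauss_norm = 1.0 / (sigma * math.sqrt(2.0 * math.pi))

    def density(mass):
        acc = 0.0
        for m, w in zip(grid, weights):
            z = (mass - scale * m) / sigma
            acc += w * gauss_norm * math.exp(-0.5 * z * z)
        return acc

    return density

def peak_position(density, lo=85.0, hi=98.0, step=0.05):
    """Locate the maximum of a density by a simple grid scan."""
    points = [lo + i * step for i in range(int(round((hi - lo) / step)) + 1)]
    return max(points, key=density)

nominal = observed_density(true_lineshape, scale=1.0, sigma=1.5)
shifted = observed_density(true_lineshape, scale=1.01, sigma=1.5)
print(f"peak with nominal scale:   {peak_position(nominal):.2f} GeV")
print(f"peak with 1% scale offset: {peak_position(shifted):.2f} GeV")
```

A 1% momentum-scale offset moves the reconstructed peak by about 0.9 GeV, while a larger `sigma` mainly broadens it; this separation is what makes the Z lineshape useful for calibrating both quantities.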